Overview

Activation maximisation is a local explanation method that searches for the input pattern which maximises the activation of a given hidden unit. It helps in understanding layer-wise feature importance for an input instance.

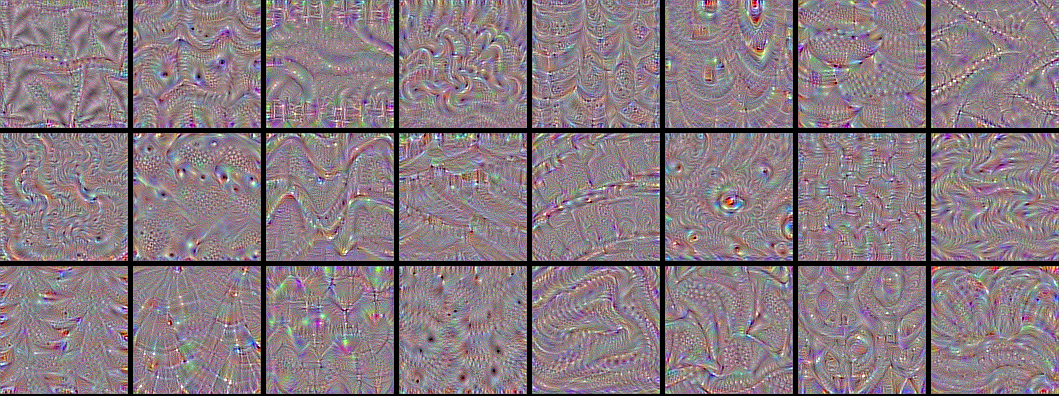

How convolutional neural networks see the world

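Let $\theta$ be the parameters of the model, and $a_{i,l}(\theta, x)$ be the activation of a particular unit $i$ from layer $l$ for an input $x$. Activation maximisation can be defined as the optimisation problem:

$$x^* = \arg\max_{x} a_{i,l}(\theta, x)$$

where $\theta$ is fixed.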

Algorithm

The above process consists of four steps:

- An image $x$ with random pixel values (a noise image) is used as the initial input to the activation computation.

- The gradient of the unit's activation with respect to the noise image, $\partial a_{i,l}(\theta, x) / \partial x$, is computed through backpropagation.

- Each pixel of the noise image is changed iteratively to maximise the activation of the neuron, guided by the direction of the gradient:

  $$x \leftarrow x + \eta \cdot \frac{\partial a_{i,l}(\theta, x)}{\partial x}$$

  where $\eta$ is the step size.

- This process terminates at a specific pattern image $x^*$, which can be seen as the preferred input for this neuron.
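
The four steps above can be sketched in a few lines of Python. This is only a minimal illustration under simplifying assumptions, not the implementation used in the referenced works: a single tanh unit stands in for the hidden unit, its analytic gradient replaces backpropagation through a real network, and the "image" is a flat list of four pixels. The names `unit_activation`, `activation_gradient`, `maximise_activation`, and all parameter values are hypothetical.

```python
import math
import random


def unit_activation(weights, bias, x):
    """Activation of one tanh unit; the parameters (weights, bias) stay fixed."""
    pre_activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return math.tanh(pre_activation)


def activation_gradient(weights, bias, x):
    """Analytic gradient of the tanh unit's activation with respect to the input x."""
    a = unit_activation(weights, bias, x)
    return [(1.0 - a * a) * w for w in weights]


def maximise_activation(weights, bias, n_inputs, step_size=0.5, n_steps=200, seed=0):
    """Gradient ascent on the input: x <- x + step_size * d(activation)/dx."""
    rng = random.Random(seed)
    # Step 1: start from a random noise "image" (here a flat list of pixels).
    x = [rng.uniform(-0.1, 0.1) for _ in range(n_inputs)]
    for _ in range(n_steps):
        # Step 2: gradient of the activation with respect to the input.
        grad = activation_gradient(weights, bias, x)
        # Step 3: move every pixel along the gradient direction.
        x = [xi + step_size * g for xi, g in zip(x, grad)]
    # Step 4: the final x is taken as the unit's preferred input pattern.
    return x


# Hypothetical fixed parameters for a unit that looks at four pixels.
weights = [1.0, -2.0, 0.5, 0.0]
bias = 0.1
x_star = maximise_activation(weights, bias, n_inputs=len(weights))
final_activation = unit_activation(weights, bias, x_star)
```

In this toy setting the resulting `x_star` follows the sign pattern of `weights`, and the pixel with zero weight keeps its initial noise value because its gradient is zero. With a real convolutional network, the gradient would instead come from an automatic differentiation framework, and a fixed number of steps is only one possible stopping rule.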

References

- Erhan, D., Courville, A., & Bengio, Y. (2010). Understanding representations learned in deep architectures.

- Qin, Z., Yu, F., Liu, C., & Chen, X. (2018). How convolutional neural network see the world-A survey of convolutional neural network visualization methods. arXiv preprint arXiv:1804.11191.

- https://blog.keras.io/how-convolutional-neural-networks-see-the-world.html